After Estate Outcry, OpenAI Blocks MLK Jr. From Sora Deepfakes

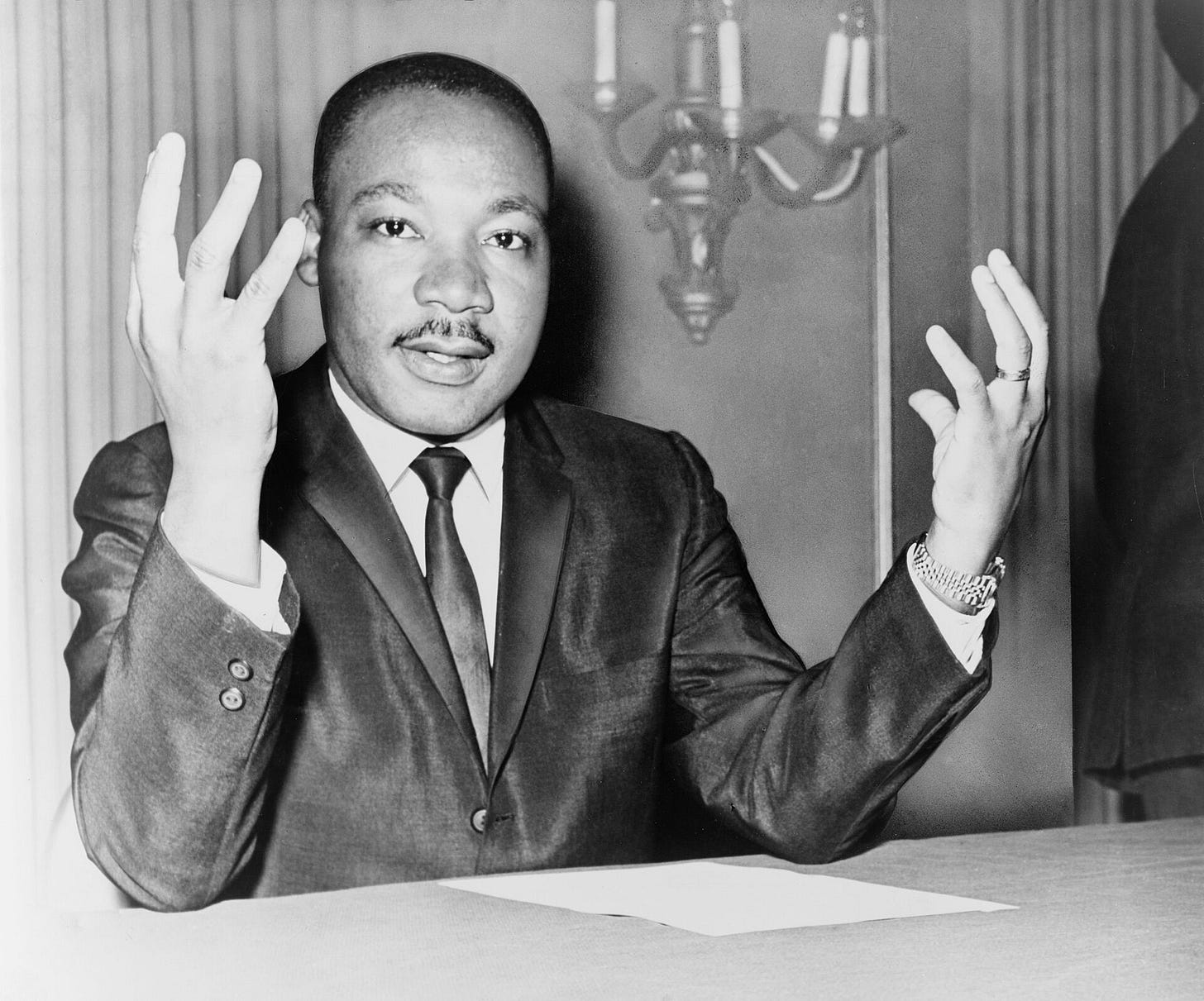

Artificial intelligence company OpenAI announced that it has blocked users from creating videos of the Rev. Dr. Martin Luther King Jr. following a number of “disrespectful depictions” using his likeness.

Across social media, the late civil rights leader has been the subject of deepfake videos that show him using offensive, vulgar and racist language. Several videos have also perpetuated racist stereotypes, with hyper-realistic footage of King stealing from a grocery store and running from the police.

The videos emerged during OpenAI's ongoing rollout of its Sora app. Launched three weeks ago, Sora is currently invite-only but is poised to usher in AI-generated social media. The app resembles other vertical video platforms and lets users create AI-generated videos featuring their own likenesses as well as those of celebrities and historical figures.

At the request of the King estate, OpenAI has now blocked users from generating deepfake videos of King on Sora.

“While there are strong free speech interests in depicting historical figures, OpenAI believes public figures and their families should ultimately have control over how their likeness is used,” OpenAI said in a post on X. “Authorized representatives or estate owners can request that their likeness not be used in Sora cameos. OpenAI thanks Dr. Bernice A. King for reaching out on behalf of King, Inc., and John Hope Bryant and the AI Ethics Council for creating space for conversations like this.”

Although OpenAI has said it will “strengthen guidelines,” figures such as Princess Diana, Malcolm X, Kurt Cobain and John F. Kennedy have already appeared in deepfake videos on Sora without explicit consent from their estates. Family members of public figures such as Robin Williams and King have weighed in. Williams’ daughter, Zelda, recently took to social media to ask the public to stop sending her AI videos of her father. Bernice King soon echoed her, asking the public to “please stop.”

As AI becomes more embedded in society, researchers have raised concerns about the use of people’s likenesses. Synthetic videos made with generative AI can mislead audiences and spread harmful misinformation whose effects persist even after the footage is shown to be fake. Those responsible for setting guidelines for the technology’s use must address the spread of misinformation.

In Congress, senators introduced the Nurture Originals, Foster Art, and Keep Entertainment Safe Act, known as the NO FAKES Act, last year. The bill seeks to establish safeguards against the use of people’s voices and likenesses without their consent.

The NO FAKES Act was recently reintroduced but remains under consideration and has not yet passed.

By Veronika Lleshi